When the Nvidia GeForce RTX 4090 was announced with an eye-watering $1,600 price tag, memes spread like wildfire. While $1,600 is a little too much for most gamers to spend on a single component (most PC build budgets I see are less than that for the whole PC), I couldn’t help but be intrigued by the potential performance improvements for my work: the 3D and AI-accelerated tasks I spend most of my day doing as part of managing the EposVox YouTube channel, rather than gaming.

Spoiler alert: The GeForce RTX 4090’s content creation performance is magical. In quite a few cases, the typically nonsense “2X performance increase” claim is actually true. But not everywhere.

Let’s dig in.

Our test setup

Most of my benchmarking was performed on this test bench:

- Intel Core i9-12900K CPU

- 32GB Corsair Vengeance DDR5 5200MT/s RAM

- ASUS ROG STRIX Z690-E Gaming Wifi Motherboard

- EVGA G3 850W PSU

- Source files stored on a PCIe Gen 4 NVMe SSD

My goals were to see how much of an upgrade the RTX 4090 would be over the previous-generation GeForce RTX 3090, as well as the Titan RTX (the card I was primarily working on before). The RTX 3090 really saw minimal improvements over the Titan RTX for my use cases, so I wasn’t sure if the 4090 would really be a big leap. For some more hardcore testing later, as I’ll mention, testing was done on this test bench:

- AMD Threadripper Pro 3975WX CPU

- 256GB Kingston ECC DDR4 RAM

- ASUS WRX80 SAGE Motherboard

- BeQuiet 1400W PSU

- Source files stored on a PCIe Gen 4 NVMe SSD

Each test featured each GPU in the same config so as not to mix results.

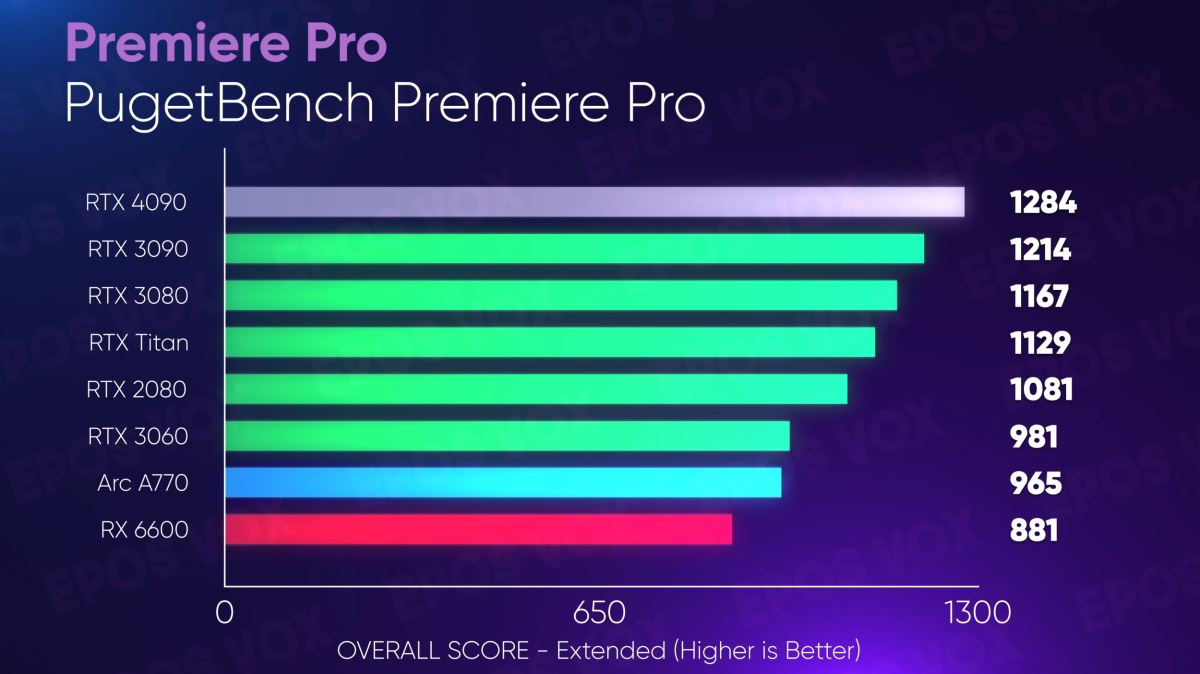

Video production

My day job is, of course, creating YouTube content, so one of the first things I wanted to test was the benefit I’d see for creating video content. Using PugetBench from the workstation builders at Puget Systems, Adobe Premiere Pro sees very minimal performance improvement with the RTX 4090 (as is to be expected at this point).

Adam Taylor/IDG

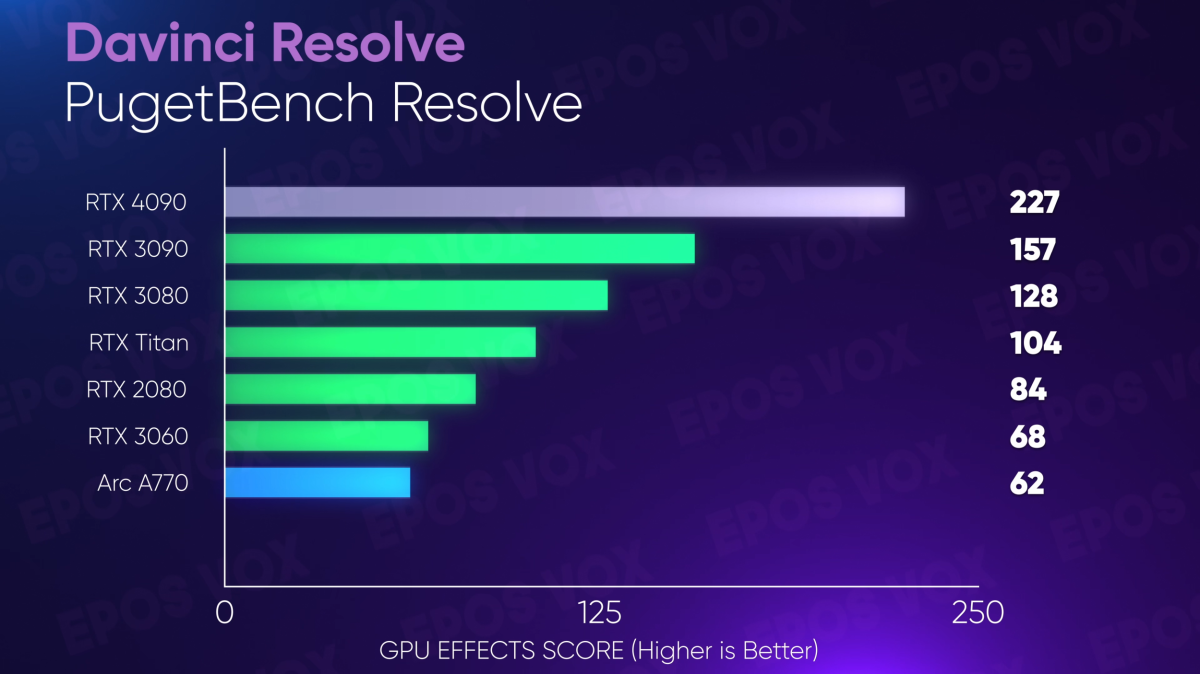

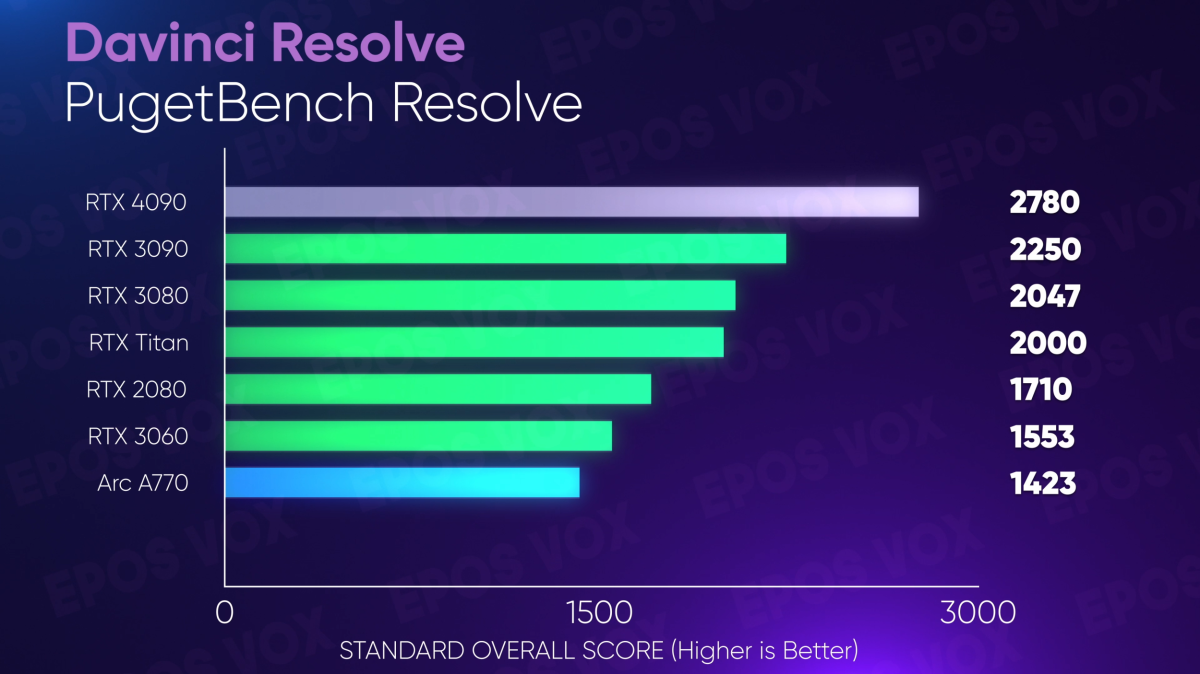

Blackmagic DaVinci Resolve, however, saw significant performance improvements across the board over both the RTX 3090 and Titan RTX. This makes sense, as Resolve is far more optimized for GPU workflows than Premiere Pro. Renders were much faster thanks both to the higher 3D compute on effects and to the faster encoding hardware onboard, and general playback and workflow felt much snappier and more responsive.

I’ve been editing with the GeForce RTX 4090 for a few weeks now, and the experience has been great, though I had to revert to the public release of Resolve, so I haven’t been able to export using the AV1 encoder for most of my videos.

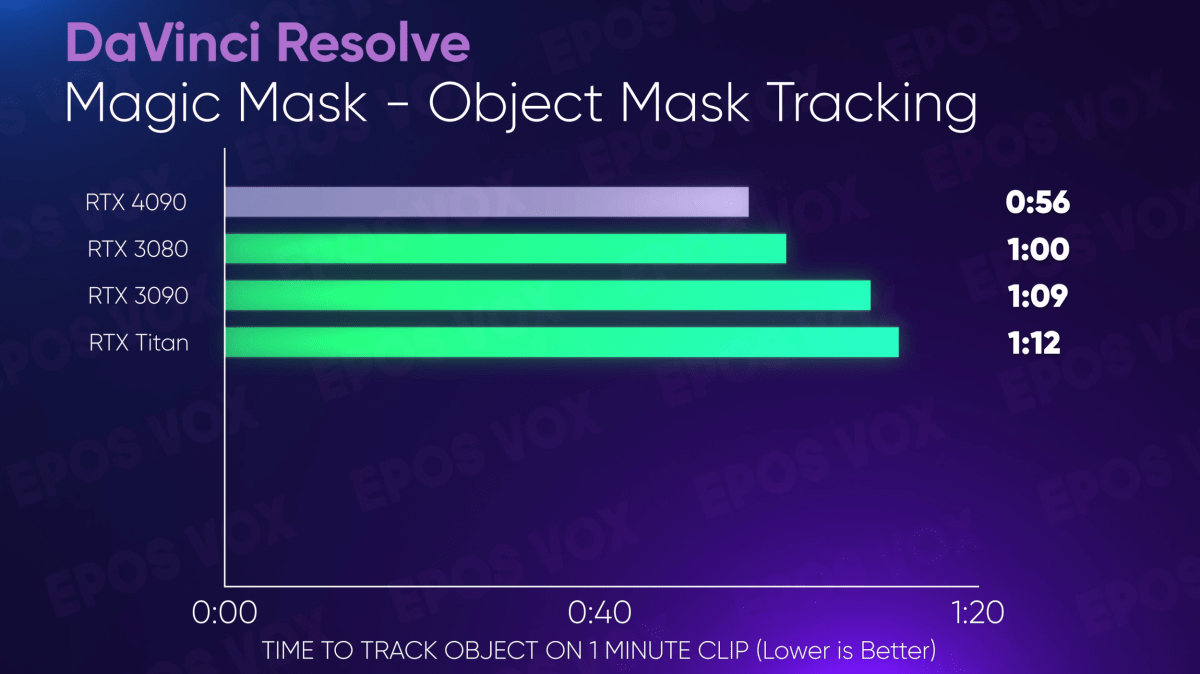

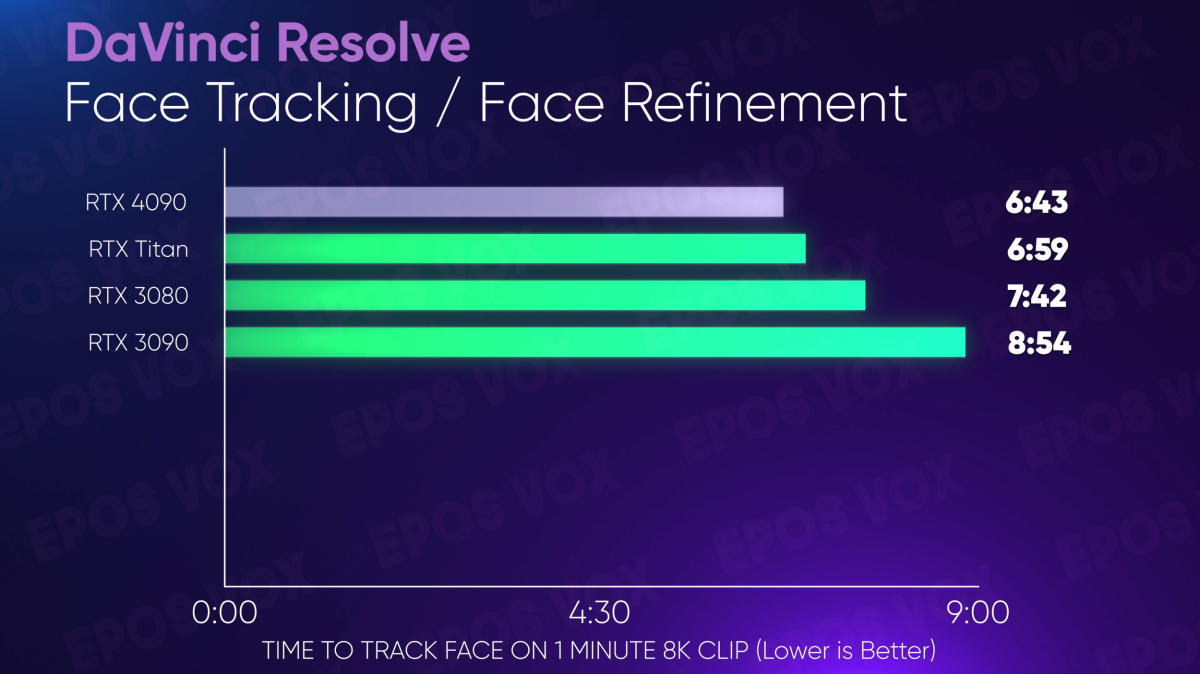

I also wanted to test whether the AI hardware improvements would benefit Resolve’s Magic Mask tool for rotoscoping, or its face tracker for the Face Refinement plugin. Admittedly, I hoped to see more improvement from the RTX 4090 here, but there is an improvement, which saves me time and is a win. These tasks are tedious and slow, so any minutes I can shave off make my life easier. Perhaps in time more optimization can be done specific to the new architecture changes in Lovelace (the RTX 40-series’ underlying GPU architecture codename).

The performance in my original Resolve benchmarks impressed me enough that I decided to build a second-tier test using my Threadripper Pro workstation: rendering and exporting an 8K video with 8K RAW source footage, lots of effects, Super Scale (Resolve’s internal “smart” upscaler) on 4K footage, and so on. This project is no joke. The normal high-tier gaming cards simply errored out because their lower VRAM quantities couldn’t handle the project, which bumps the RTX 3060, 2080, and 3080 out of the running. But putting the 24GB VRAM monsters to the test, the RTX 4090 exported the project a whole 8 minutes faster than the rest. Eight minutes. That kind of time scaling is game-changing for single-person workflows like mine.

If you’re a high-res or effect-heavy video editor, the RTX 4090 is already going to save you hours of waiting and slower working, right out of the gate, and we haven’t even talked about encoding speeds yet.

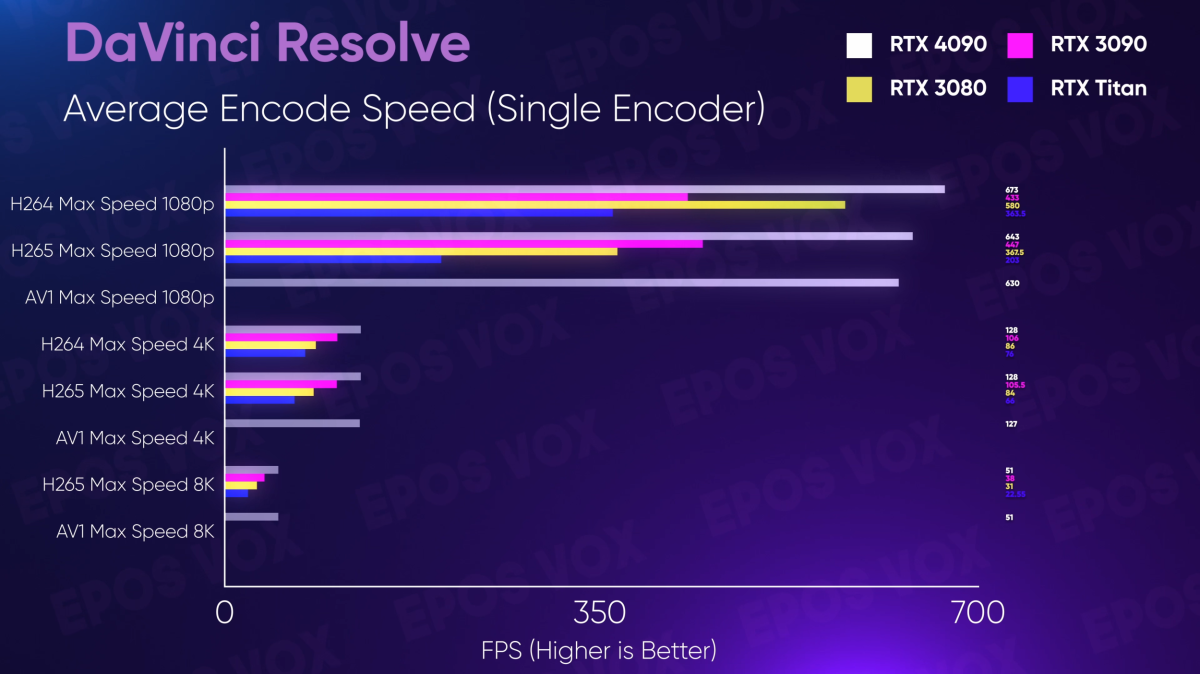

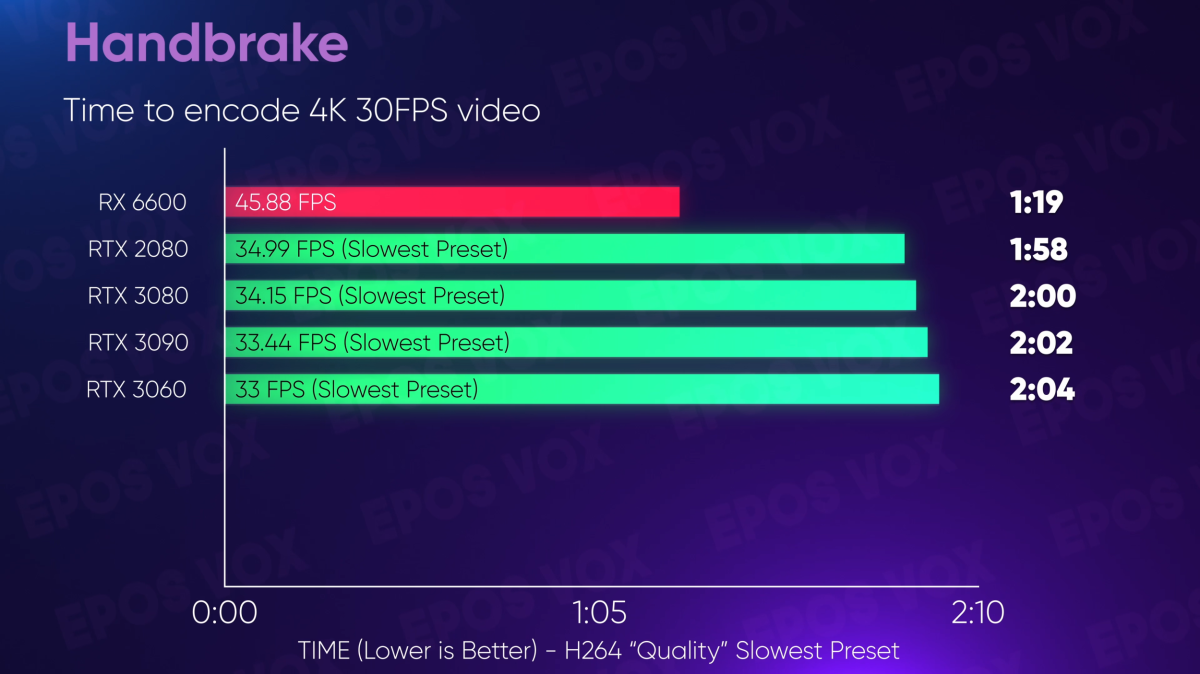

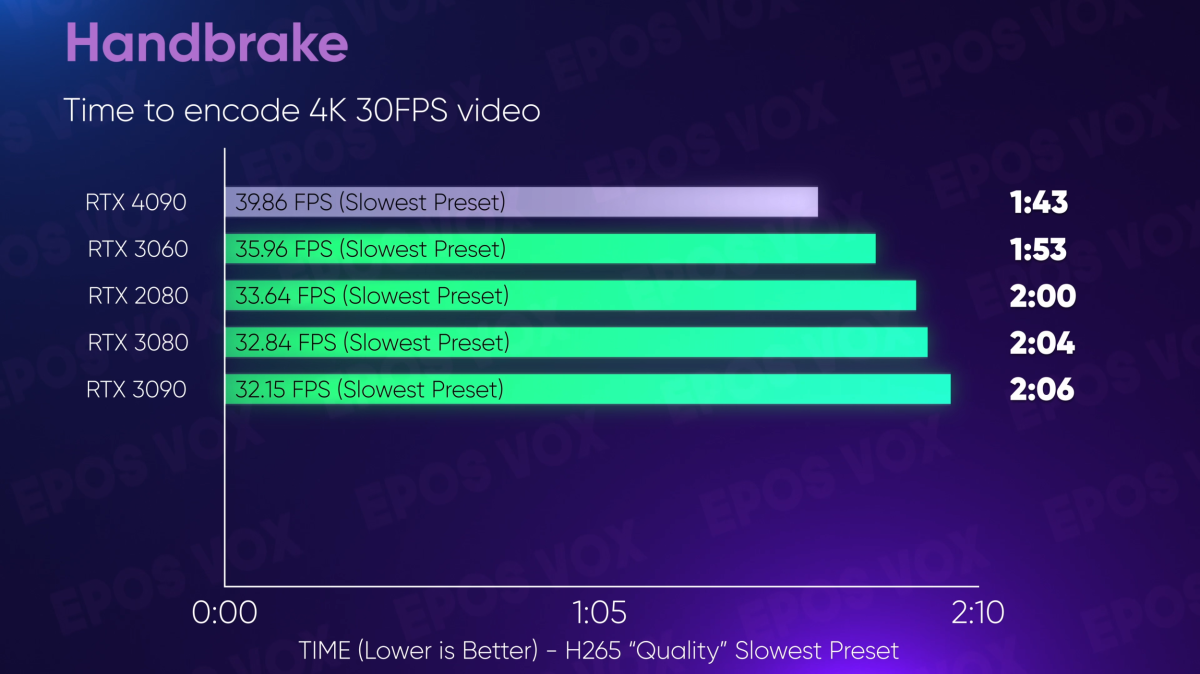

Video encoding

For simply transcoding video in H.264 and H.265, the GeForce RTX 4090 also just runs laps around previous Nvidia GPUs. H.265 is the only area where AMD outperforms Nvidia in encoder speed (though not necessarily quality): ever since the Radeon 5000 GPUs, AMD’s HEVC encoder has been blazing fast.

The new Ada Lovelace architecture also comes with new dual encoder chips that individually already run a fair bit faster for H.264 and H.265 encoding than Ampere and Turing, but they also encode AV1, the new open-source video codec from the Alliance for Open Media.

AV1 is the future of web-streamed video, with most major companies involved in media streaming also being members of the consortium. The goal is to create a highly efficient (as in, better quality per bit) video codec that can meet the needs of the modern high-resolution, high-frame-rate, and HDR streaming world, while avoiding the high licensing and patent costs associated with the H.265 (HEVC) and H.266 codecs. Intel was first to market with hardware AV1 encoders in its Arc GPUs, as I covered for PCWorld; now Nvidia brings it to its GPUs.

I can’t get completely accurate quality comparisons between Intel’s and Nvidia’s AV1 encoders yet because of limited software support. From the basic tests I could do, Nvidia’s AV1 encodes are on par with Intel’s, but I’ve since learned that the encoder implementations, even in the software where I can use both, could use some fine-tuning to best represent both sides.

Performance-wise, AV1 performs about as fast as H.265/HEVC on the RTX 4090. Which is fine. But the new dual encoder chips allow both H.265 and AV1 to be used to encode 8K60 video, or just faster 4K60 video. They do this by splitting up the video frames into horizontal halves, encoding the halves on the separate chips, and then stitching them back together before finalizing the stream. This sounds like how Intel’s Hyper Encode was supposed to work (Hyper Encode instead separates GOPs, or Groups of Pictures, among the iGPU and dGPU with Arc), but in all of my tests, I only found Hyper Encode to slow down the process rather than speed it up. (Plus it didn’t work with AV1.)
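To make the split-frame idea concrete, here is a minimal Python sketch of the general pattern: cut a frame in two, encode the halves in parallel, and stitch the results. It is not Nvidia’s implementation. The `fake_encode` and `fake_decode` helpers are hypothetical stand-ins (a toy run-length coder) for the hardware encoders, and cutting the frame into a top and bottom half is an assumption made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def fake_encode(rows):
    """Hypothetical stand-in for one hardware encoder: run-length encode each row."""
    encoded = []
    for row in rows:
        runs = []
        for pixel in row:
            if runs and runs[-1][0] == pixel:
                runs[-1][1] += 1  # extend the current run
            else:
                runs.append([pixel, 1])  # start a new run
        encoded.append(runs)
    return encoded


def fake_decode(encoded):
    """Inverse of fake_encode, used only to check the stitched result."""
    return [[pixel for pixel, count in runs for _ in range(count)] for runs in encoded]


def split_frame_encode(frame):
    """Split a frame into two halves, encode them in parallel, then stitch."""
    middle = len(frame) // 2
    halves = [frame[:middle], frame[middle:]]  # assumption: top/bottom split
    with ThreadPoolExecutor(max_workers=2) as pool:
        top, bottom = pool.map(fake_encode, halves)  # one worker per "encoder"
    return top + bottom  # stitch the two encoded halves back together


# A tiny 4x4 "frame" of pixel values.
frame = [
    [0, 0, 1, 1],
    [2, 2, 2, 2],
    [3, 4, 4, 3],
    [5, 5, 5, 6],
]
stitched = split_frame_encode(frame)
round_trip = fake_decode(stitched)
```

Because each row is encoded independently in this toy coder, the stitched output matches what a single encoder would produce for the whole frame. A real codec has to handle prediction across the seam, which is part of why the stitching step exists.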

Streaming

As a result of the aforementioned improvements in encoder speed, streaming and recording your screen, camera, or gameplay is a far, far better experience. This comes with an update to the NVENC encoder SDK within OBS Studio, now presenting users with seven presets (akin to x264’s “CPU Usage Presets”) scaling from P1, the fastest and lowest quality, to P7, the slowest and highest quality. In my testing in this video, P6 and P7 produced basically the exact same result on RTX 2000, 3000, and 4000 GPUs, and competed with x264 VerySlow in quality.

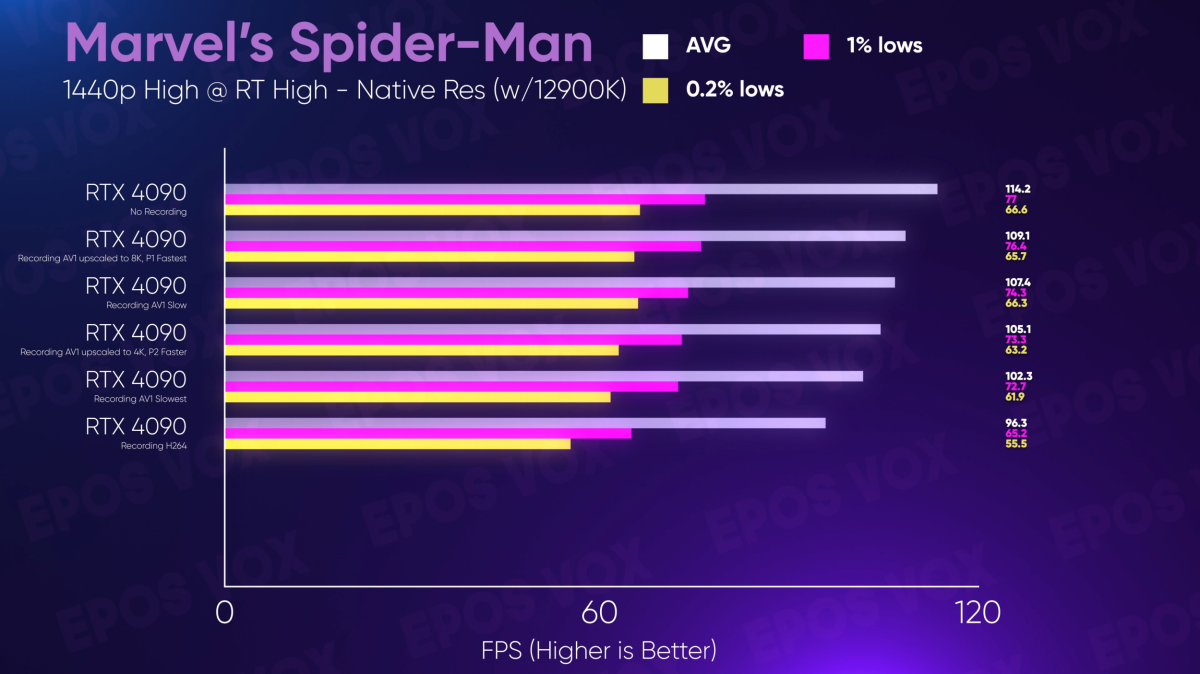

While game streaming, I saw mostly the same recording performance as other GPUs in Spider-Man Remastered (though other games will see more benefits) with H.264, but then encoding with AV1… had negligible impact on game performance at all. It was virtually transparent. You wouldn’t even know you were recording, even on the highest quality preset. I even had enough headroom to set OBS to an 8K canvas, upscale my 1440p game capture to 8K within OBS, and record using the dual encoder chips, and still not see a significant impact.

Unfortunately, while Nvidia’s ShadowPlay feature does get 8K60 support on Lovelace via the dual encoders, only HEVC is supported at this time. Hopefully AV1 will be implemented soon, and supported for all resolutions, as HEVC only works for 8K or HDR as is.

I also found that the GeForce RTX 4090 is now fast enough to do completely lossless 4:4:4 HEVC recording at 4K 60FPS, something prior generations simply cannot do. 4:4:4 chroma subsampling is important for maintaining text clarity and for keeping the image intact when zooming in on small elements like I do for videos, and at 4K it’s kind of been a “white whale” of mine, as the throughput on RTX 2000/3000 hasn’t been enough or the OBS implementation of 4:4:4 isn’t optimized enough. Unfortunately, 4:4:4 isn’t possible in AV1 on these cards at all.
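For a rough sense of why 4:4:4 is so much more demanding, the sketch below counts the raw samples per second the encoder has to ingest at 4K60 for different chroma formats. It assumes “4K” means 3840×2160, and raw sample count is only a crude proxy for encoder load, not a measurement of any card.

```python
def samples_per_second(width, height, fps, chroma):
    """Raw luma + chroma samples per second for a given chroma format."""
    # Samples per pixel: one luma sample, plus two chroma planes whose
    # resolution depends on the subsampling scheme.
    samples_per_pixel = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}[chroma]
    return width * height * fps * samples_per_pixel


# Assumption: 4K here means 3840x2160 at 60 frames per second.
full = samples_per_second(3840, 2160, 60, "4:4:4")
subsampled = samples_per_second(3840, 2160, 60, "4:2:0")
ratio = full / subsampled

print(f"4:4:4 -> {full:,.0f} samples/s")
print(f"4:2:0 -> {subsampled:,.0f} samples/s")
print(f"4:4:4 carries {ratio:.1f}x the raw samples of 4:2:0")
```

In other words, 4:4:4 at 4K60 asks the encoder to handle twice the raw data of the usual 4:2:0 recording, before lossless mode is even considered.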

Photo editing

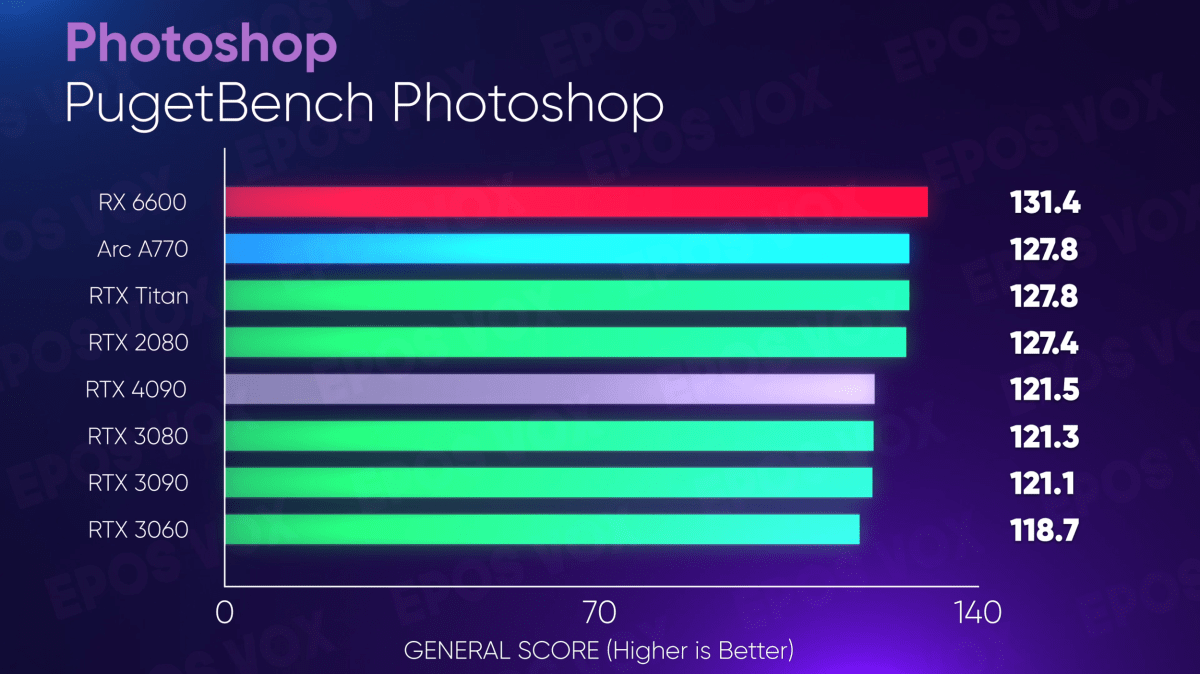

Photo editing sees virtually zero improvement on the GeForce RTX 4090. There’s a slight score increase on the Adobe Photoshop PugetBench tests versus previous generations, but nothing worth buying a new card over.

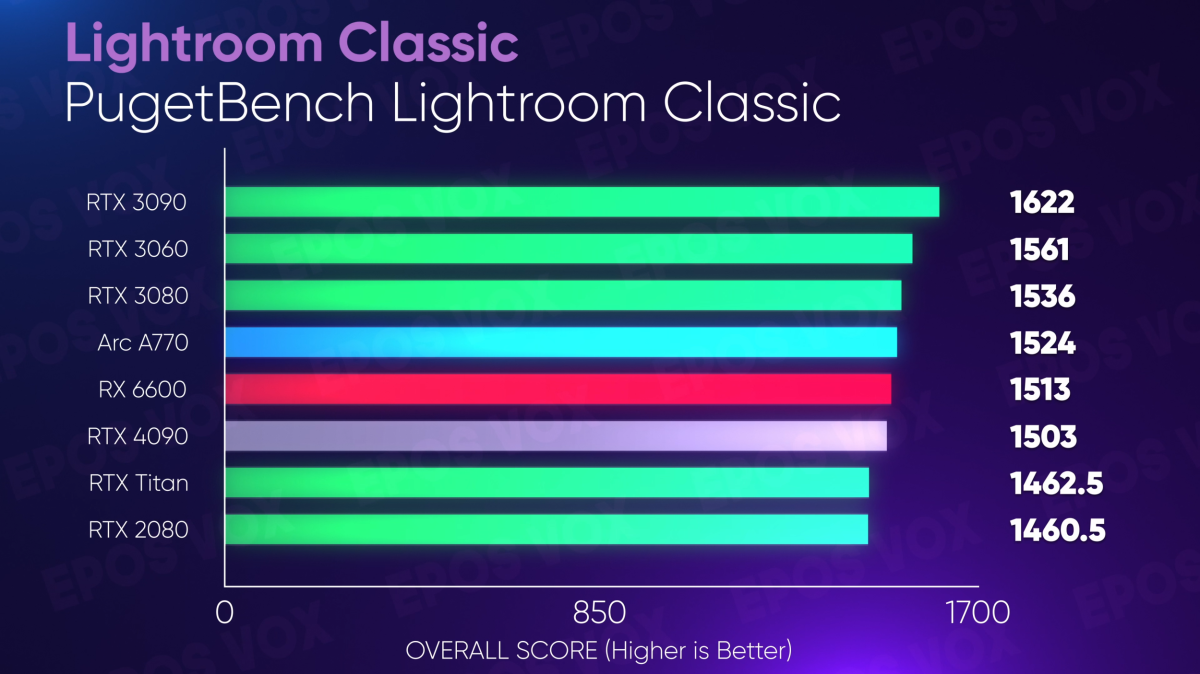

The same goes for Lightroom Classic. Shame.

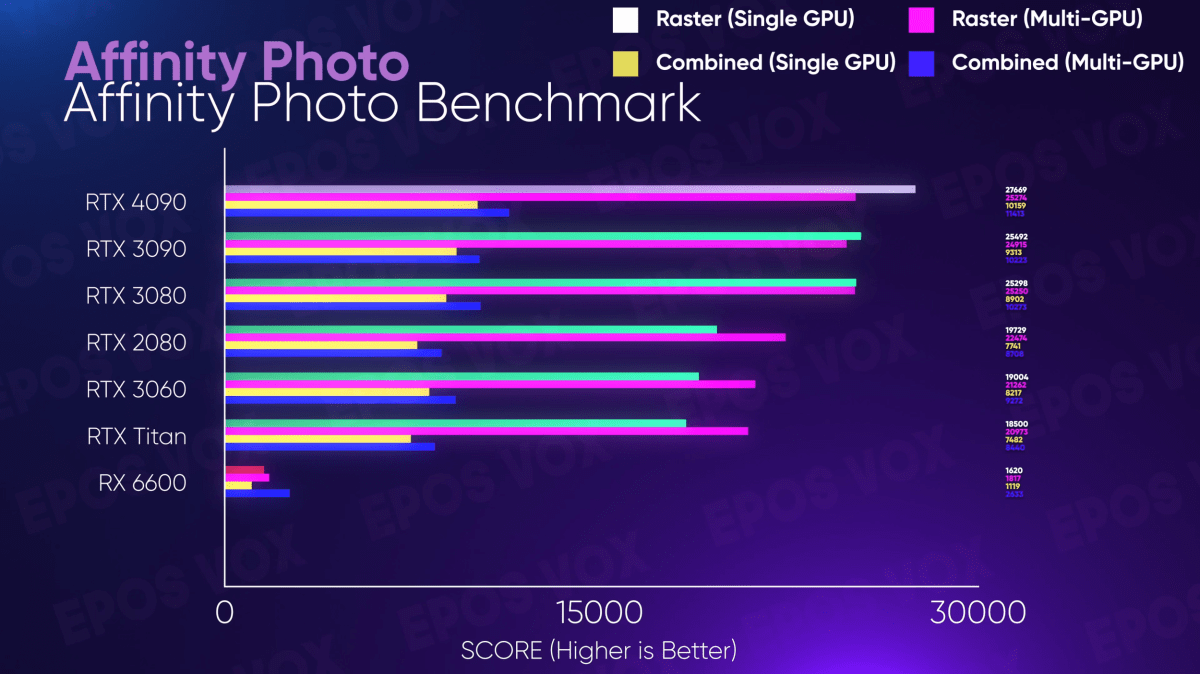

But if you’re an Affinity Photo user, the RTX 4090 far outperforms other GPUs. I’m not sure whether to interpret that as Affinity being more or less optimized in this case.

AI

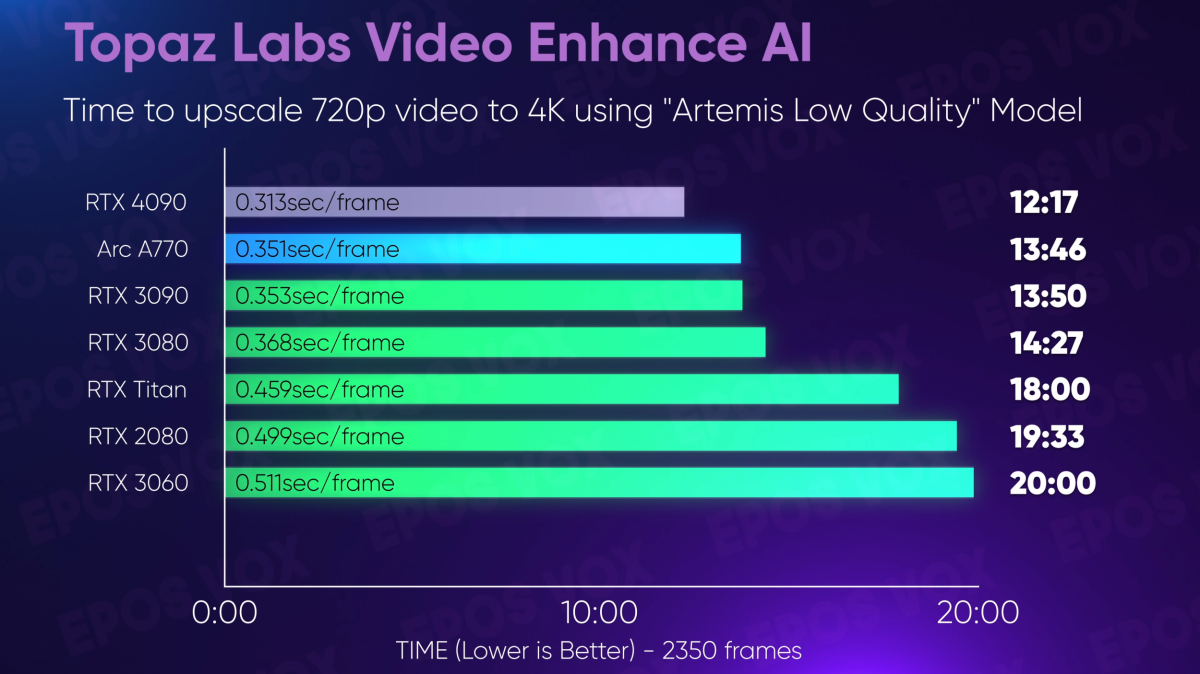

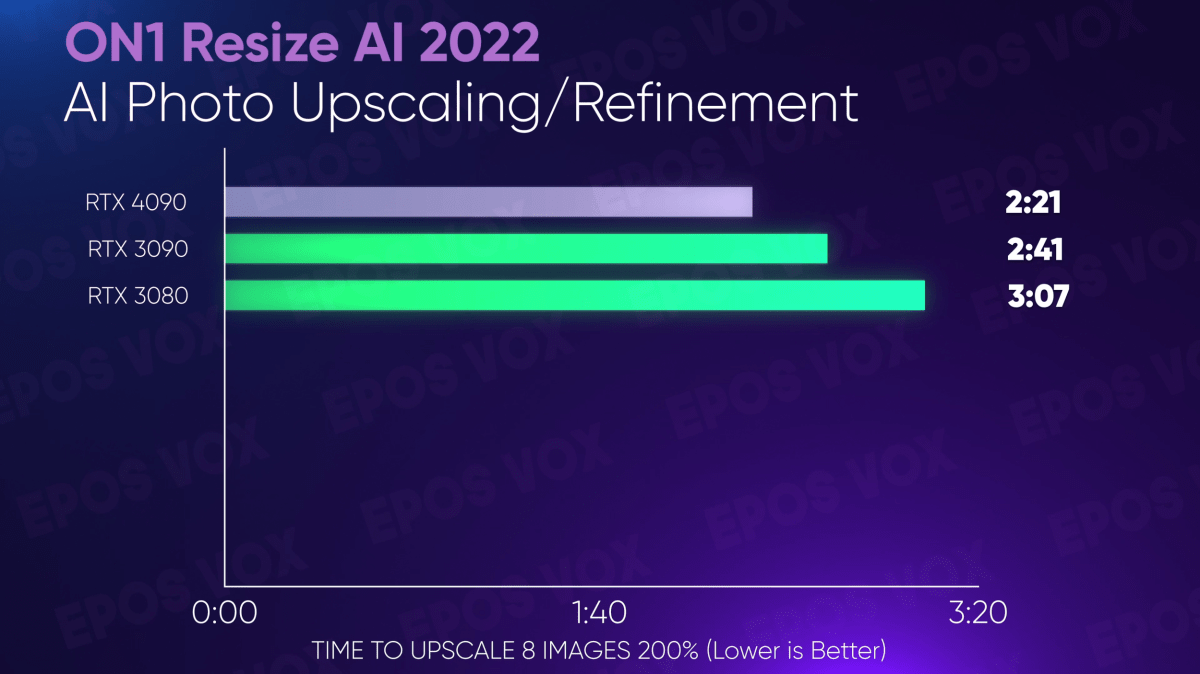

AI is all the rage these days, and AI upscalers are in high demand right now. Theoretically, the GeForce RTX 4090’s improved AI hardware would benefit these workflows, and we mostly see this ring true. The RTX 4090 tops the charts for fastest upscaling in Topaz Labs Video Enhance AI and Gigapixel, as well as ON1 Resize AI 2022.

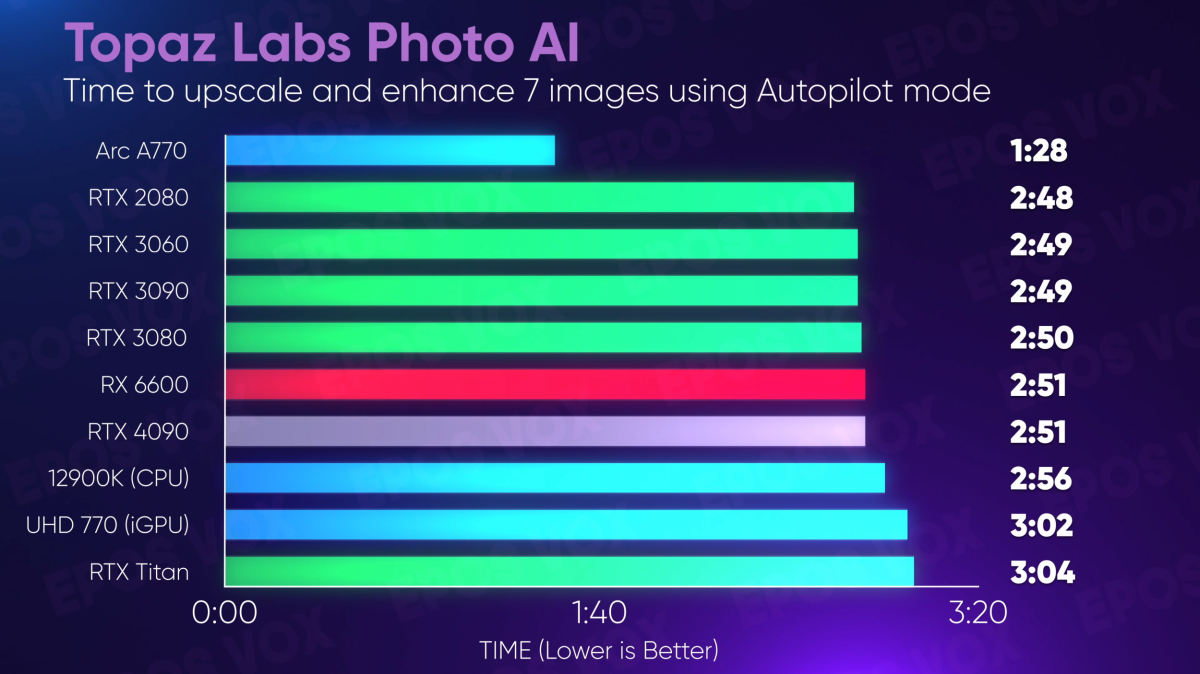

But Topaz’s new Photo AI app sees weirdly low performance on all Nvidia cards. I’ve been told this may be a bug, but a fix has yet to be distributed.

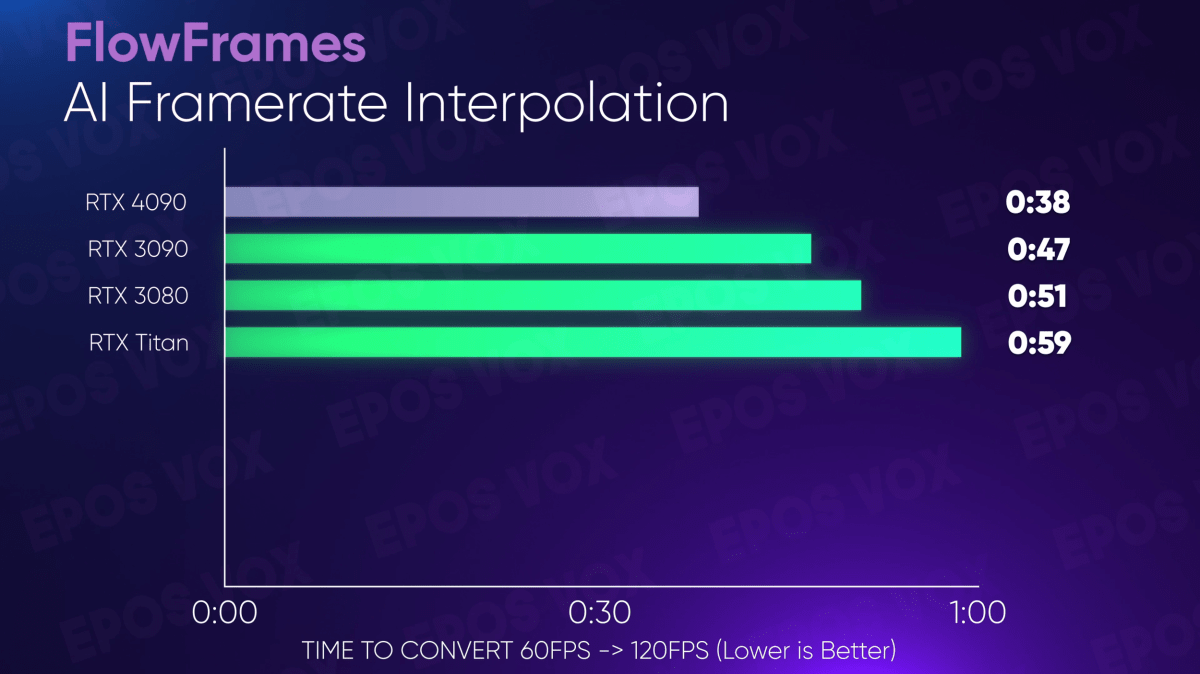

Using FlowFrames to AI-interpolate 60FPS footage to 120FPS for slow-mo usage, the RTX 4090 sees a 20 percent speed-up compared to the RTX 3090. This is good as it is, but I’ve been told by users in the FlowFrames Discord server that this could theoretically scale more as optimizations for Lovelace are developed.

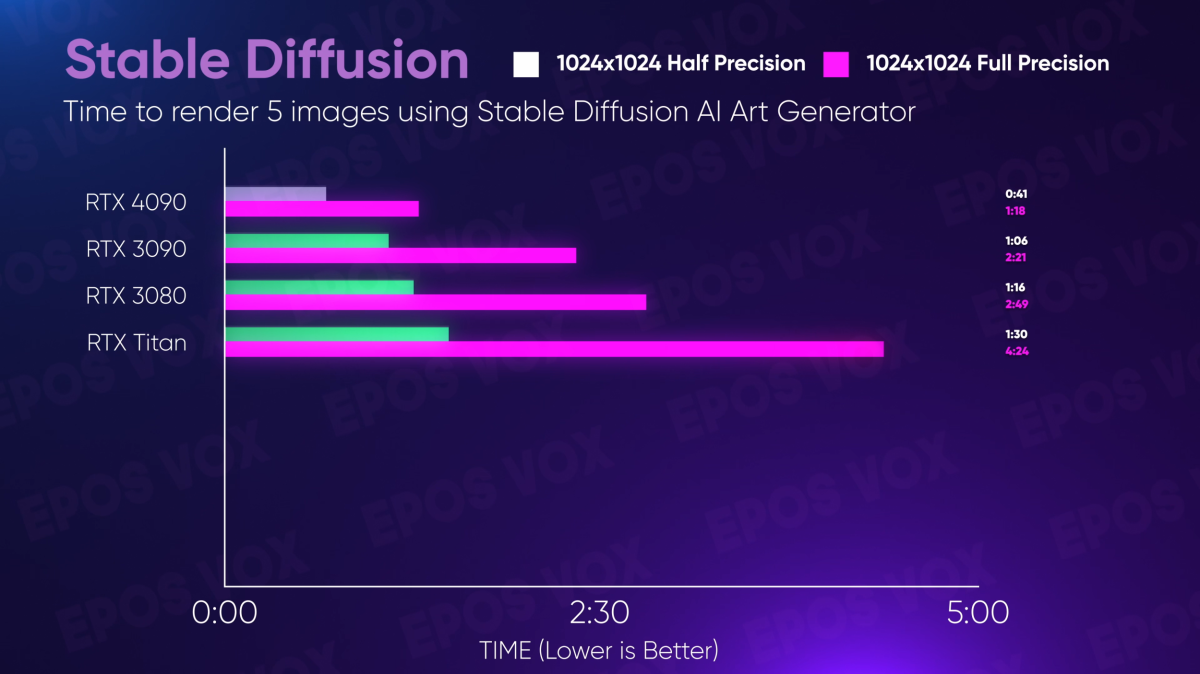

What about generating AI art? I tested N00mkrad’s Stable Diffusion GUI and found that the GeForce RTX 4090 blew away all previous GPUs in both half- and full-precision generation, and once again I have been told the results “should be” higher, even. Exciting times.

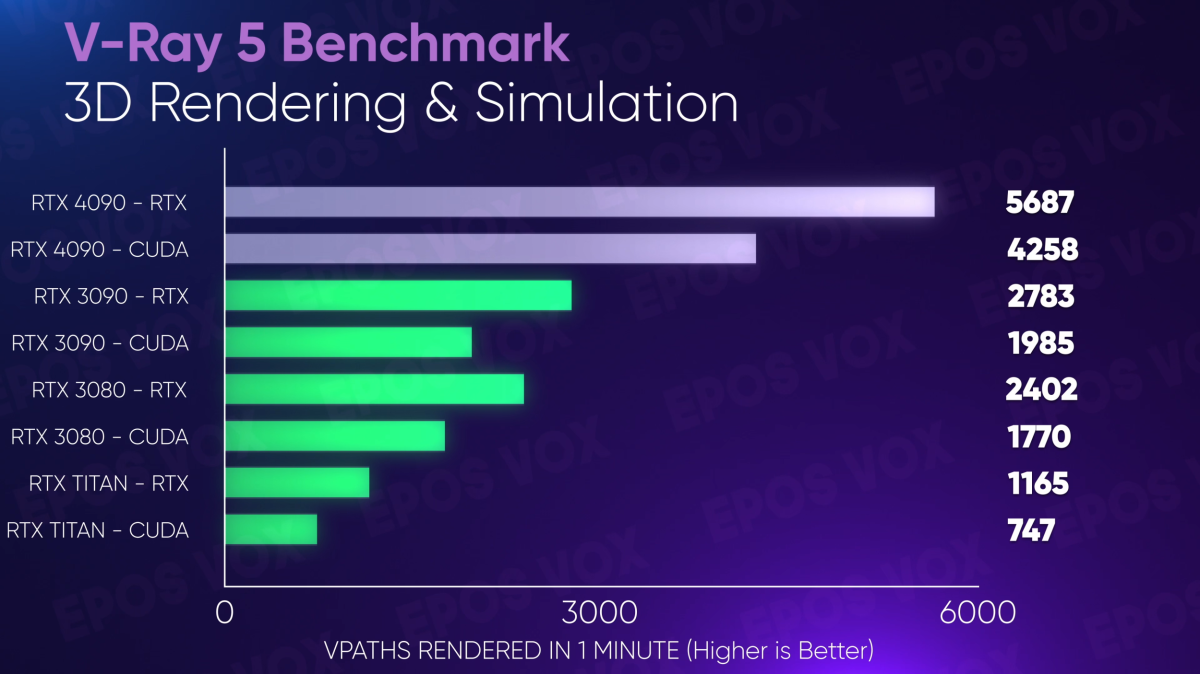

3D rendering

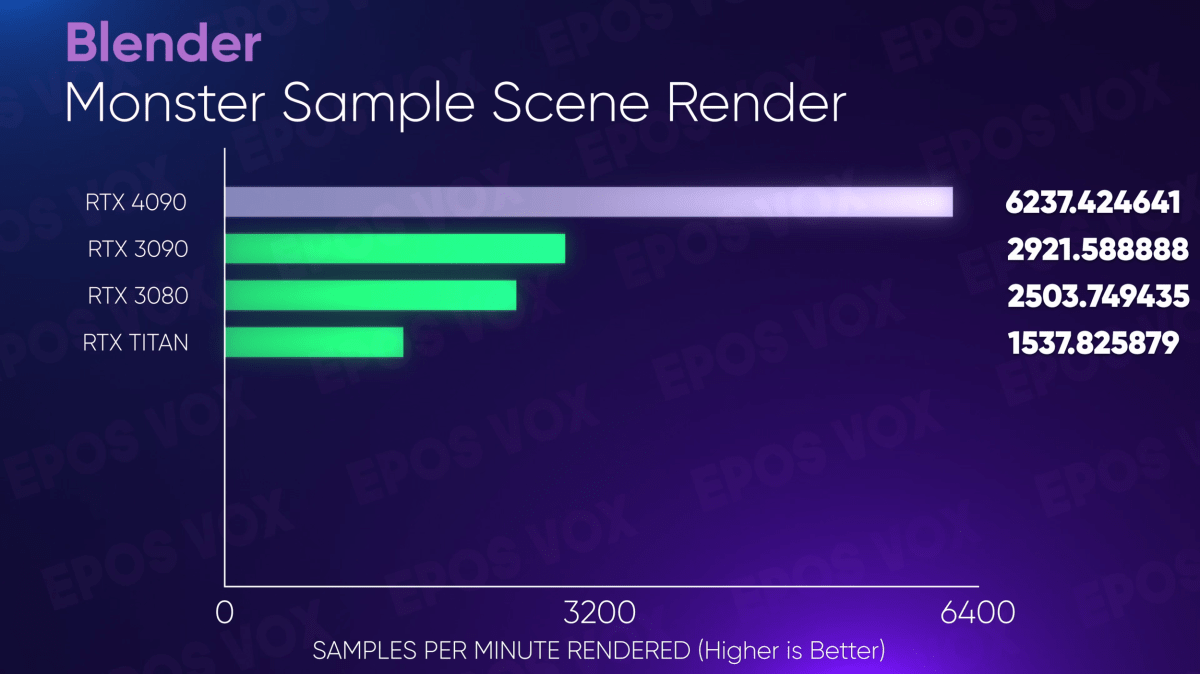

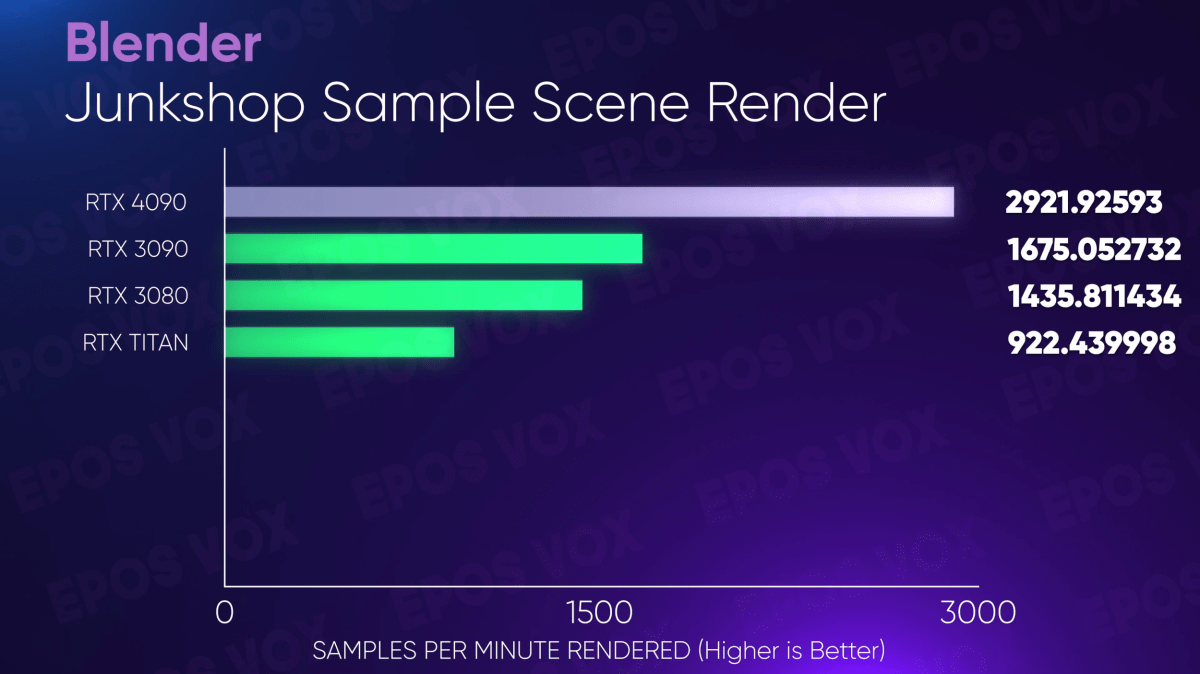

Alright, the bold “2X Faster” claims are here. I wanted to test 3D workflows on my Threadripper Pro rig, since I’ve been getting more and more into these new tools in 2022.

Testing Blender, both the Monster and Classroom benchmark scenes have the RTX 4090 rendering twice as fast as the RTX 3090, with the Junkshop scene rendering just shy of 2X faster.

This translates not only to faster final renders, which at scale is absolutely massive, but also to a much smoother creative process, as all of the actual preview/viewport work will be more fluid and responsive too, and you can more easily preview the final results without waiting forever.

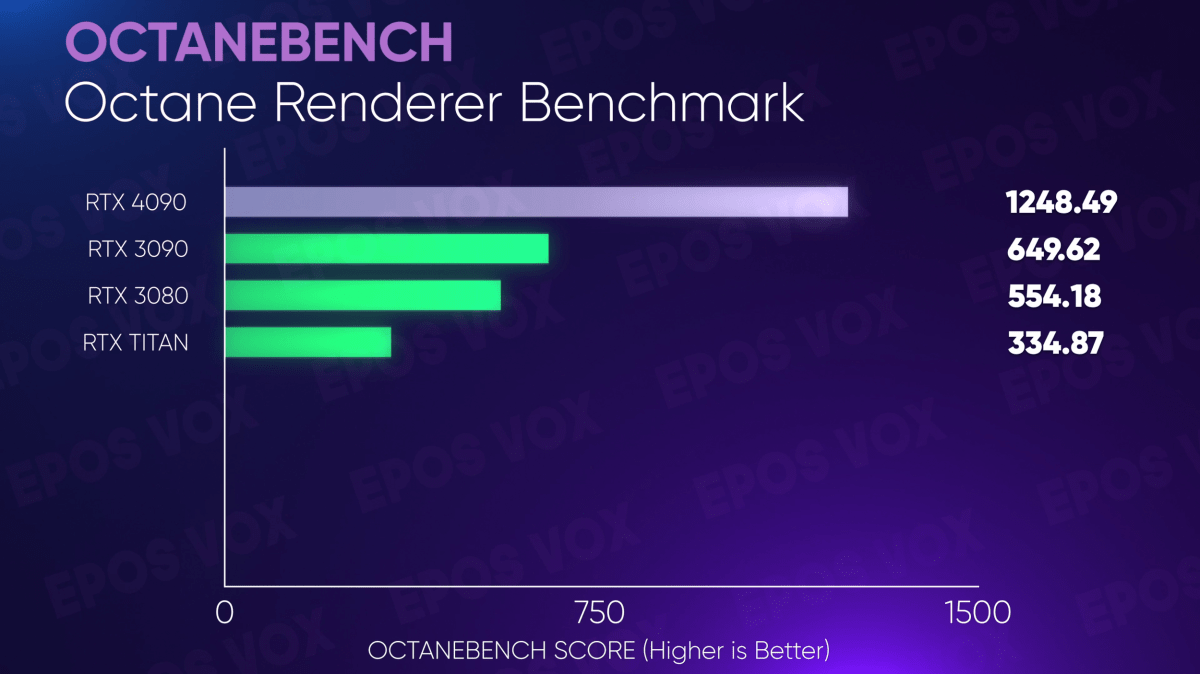

Benchmarking Octane, a renderer used by 3D artists and VFX creators in Cinema4D, Blender, and Unity, again has the RTX 4090 running twice as fast as the RTX 3090.

…and again, the same goes for V-Ray in both CUDA and RTX workflows.

Bottom line: The GeForce RTX 4090 offers outstanding value to content creators

That’s where the value is. The Titan RTX was $2,500, and it was already phenomenal to get that performance for $1,500 with the RTX 3090. Now for $100 more, the GeForce RTX 4090 runs laps around prior GPUs in ways that have truly game-changing impacts on workflows for creators of all kinds.
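The value argument can be put into numbers with a quick back-of-the-envelope calculation. The prices come from the paragraph above. The 2.0x speed-up is an assumption borrowed from the best-case 3D rendering results and will not hold for every workload (Photoshop and Premiere Pro saw almost nothing).

```python
def perf_per_dollar_gain(old_price, new_price, speedup):
    """How much more performance each dollar buys, relative to the old card."""
    return speedup * old_price / new_price


rtx_3090_price = 1500  # launch price quoted in the article
rtx_4090_price = 1600  # launch price quoted in the article
assumed_speedup = 2.0  # assumption: best-case 3D rendering result, not a universal figure

price_increase = rtx_4090_price / rtx_3090_price - 1
gain = perf_per_dollar_gain(rtx_3090_price, rtx_4090_price, assumed_speedup)

print(f"Price increase: {price_increase:.1%}")
print(f"Performance per dollar vs. RTX 3090: {gain:.3f}x")
```

Under that assumption, a price bump of under 7 percent buys roughly 1.9 times the rendering performance per dollar.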

This might explain why the formerly-known-as-Quadro line of cards got far less emphasis over the past few years, too. Why buy a $5,000+ graphics card when you can get the same performance (or more; Quadros were never super fast, they just had lots of VRAM) for $1,600?

Obviously the pricing of the $1,200 RTX 4080 and the recently un-launched $899 4080 12GB can still be concerning until we see independent testing numbers, but the GeForce RTX 4090 might just be the first time marketing has boasted of “2x faster” performance on a product and I feel like I’ve actually received that promise. Especially for results within my niche work interests instead of mainstream tools or gaming? This is awesome.

Pure gamers probably shouldn’t spend $1,600 on a graphics card, unless feeding a high-refresh-rate 4K monitor with no compromises is a goal. But if you’re interested in getting real, nitty-gritty content creation work done fast, the GeForce RTX 4090 can’t be beat, and it’ll make you grin from ear to ear during any late-night Call of Duty sessions you hop into, as well.