If a brand new phone gave me an occasional electric shock, I wouldn’t recommend it. Even if it only shocked me occasionally, when I opened a specific app, I would say no. If a phone wasn’t just bad, but shockingly harmful, I’d say that phone, or at least the electric shock part, should be removed.

I just spent a couple of weeks with the Google Pixel 9a, which has a tool called Pixel Studio, available on all of Google’s latest Pixel phones. Pixel Studio is an AI-powered image generator that creates images from a text prompt. Until recently, Pixel Studio refused to depict people, but Google removed those guardrails, and the results predictably reinforce stereotypes. That’s not just bad, that’s harmful.

I’m asking Google – and all phone makers – to stop offering image generators that make images of people. These tools can lead to bigotry.

Let’s try a quick role play: You be Pixel Studio, and I will be me. Hey, Pixel Studio: Make me an image of a successful person!

What image will you make? What do you see in your mind when you think of success? Is it someone who looks like you? The answer will be different for everybody, depending on your own view of success.

Not for Pixel Studio. Pixel Studio has a singular vision of a successful person. Unless you happen to be a young, white, able-bodied man, Pixel Studio probably doesn’t see you when it envisions success.

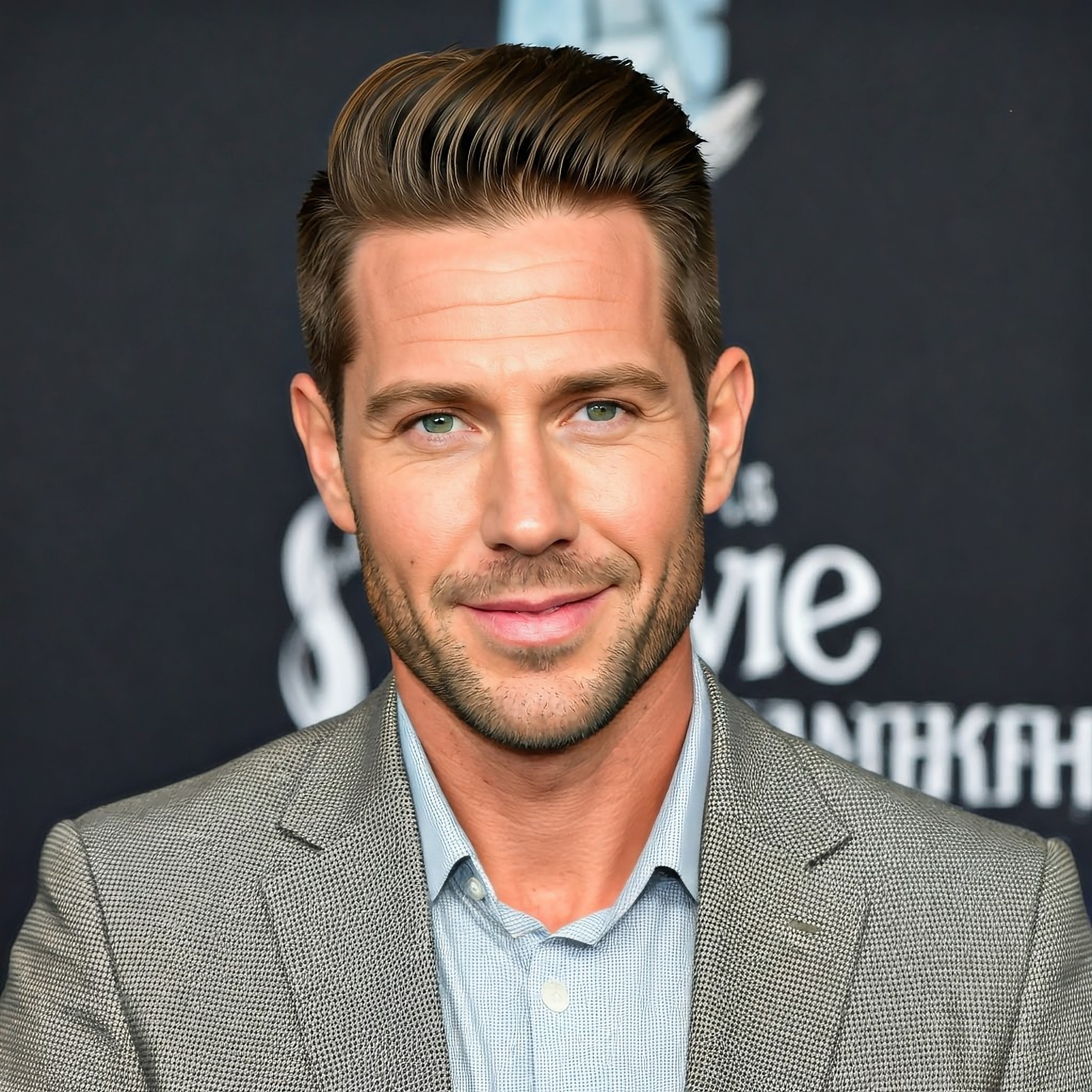

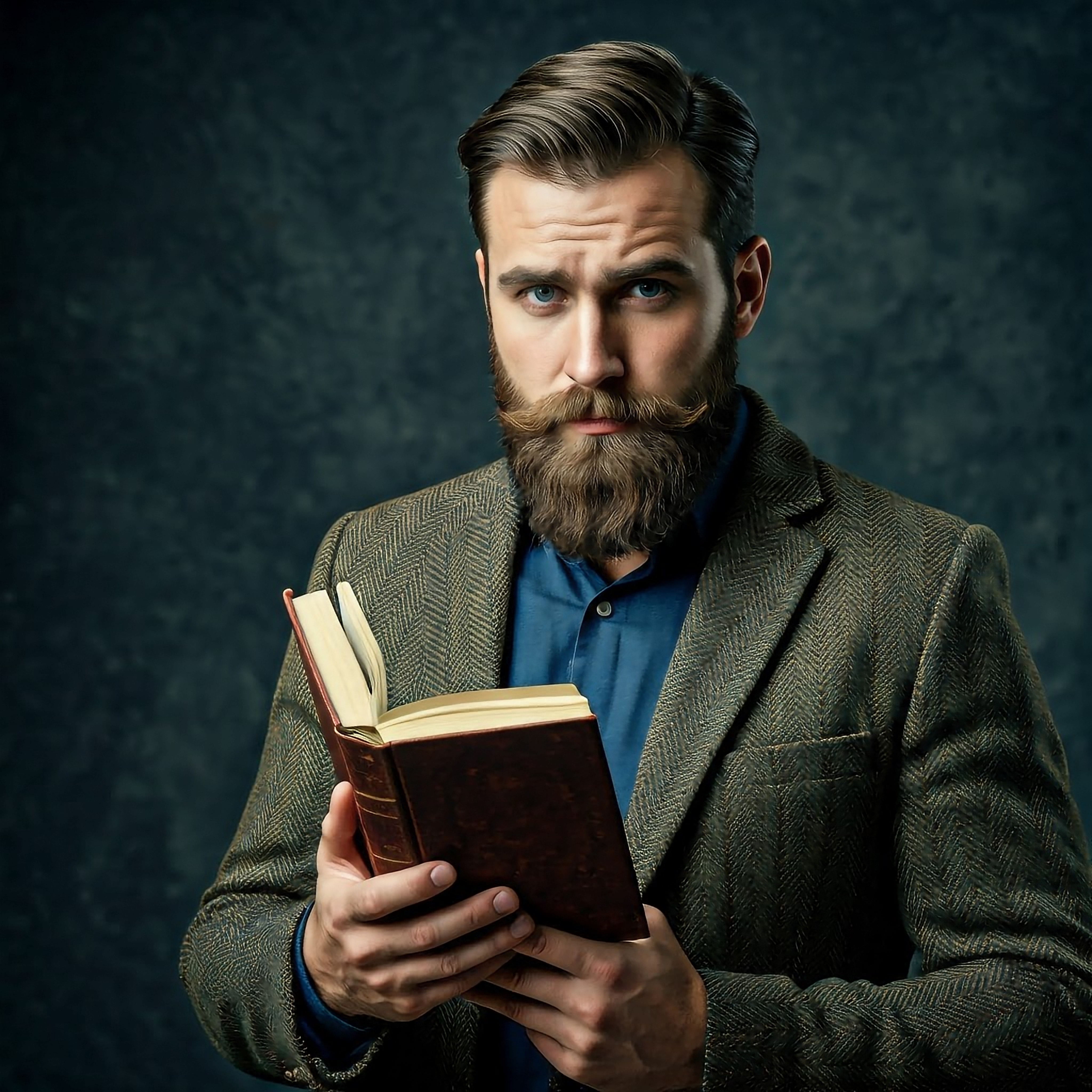

This is what the Pixel 9a thinks a successful person actually looks like

I asked Pixel Studio five times for an image of ‘a successful person.’ Of the five people Pixel Studio created for me, zero were older. None used a wheelchair, hearing aids, or a cane.

All of them wore expensive-looking suits, even the woman. That’s right, just one woman and four men. And yes, all of them were white.

I have a serious problem with this because the Pixel’s digital brain is clearly rooted in lazy stereotypes. These stereotypes support misogyny, ableism, racism, ageism, and who knows what other biases.

This is ingrained in the Pixel’s thinking. If you use the Pixel 9a to be more successful, you should know that it has a very limited, stereotypical idea of success. Every time the Pixel phone represents success in its suggestions, it may be colored by this bigotry.

In Pixel Studio’s narrow world, success means you are young, white, able-bodied, probably a man, and wealthy. Apparently, nobody successful is old, non-white, disabled, transgender, or uninterested in flashy suits or material wealth, among the many diverse traits a successful person might have.

Did I just get unlucky? I asked Pixel Studio five times, then I asked twice more after I realized it had only created one woman. I got another white man and a woman who looked like she might be Latina or of Middle Eastern descent. Both were young, standing tall, and wearing suits.

I’m not simplifying anything; Pixel Studio is simplifying things down to the most basic, biased denominator. This is baked into how these AI tools learn.

Pixel Studio generates stereotypes because that’s how it’s supposed to work

First of all, AI training data was mostly taken from the Internet and public forums. The data inevitably mirrors the biases of the messy, unequal world that created it.

There was no concerted effort to combat stereotypes or introduce diversity into the training data. AI companies like Google simply hoovered up everything they could find, apparently without much thought regarding the biases shaping the data itself. That taints the entire model from the ground up.

Second, machine learning looks for patterns and groups things together. That’s not always a bad thing. When a computer looks for patterns and groups, for instance, letters and words together, you get language and ChatGPT.

Apply that pattern-matching to people’s appearances, and voilà: stereotypes. That’s pretty much the definition of a stereotype.

Merriam-Webster defines a stereotype as “something conforming to a fixed or general pattern.” The fundamental way machine learning works reinforces stereotypes. It’s almost unavoidable.

Finally, machine learning tools are trained by us – users who are asked a simple question after every response: was this a good response? We don’t get to say if the response is true, accurate, fair, or harmful. We only get to tell the AI if the response is good or bad. That means we’re training the AI on our gut reactions – our own ingrained stereotypes.

An image conforming to our biases feels comfortably familiar, or good. A response that defies our expectations will cause cognitive stress. Unless I’m actively trying to deconstruct my biases, I’ll tell the machine it’s doing a good job when it reinforces stereotypes I believe.

Stereotypes are bad, mmmmkay?

Let’s be clear: stereotypes are poison. Stereotypes are a root cause of some of the biggest problems our society faces.

Stereotyping reduces diverse groups of people into simple, usually negative and ugly caricatures. That makes it easier to feel like the group doesn’t belong with the rest of us. This leads to prejudice and discrimination. There is no benefit that comes from stereotyping.

This isn’t just philosophical hand-wringing; stereotyping causes real harm. People who feel discriminated against experience more health problems like heart disease and hypertension. Doctors who stereotype patients offer a lower standard of care without realizing they’re causing harm.

The kind of stereotypical thinking reflected in these AI images contributes to hiring discrimination, wage gaps between different groups in the same jobs, and a lack of opportunities in higher-level positions for marginalized groups.

When we look at attacks on diversity, equity and inclusivity, we must draw a depressingly straight line that passes through Pixel Studio’s narrow vision of ‘success’ to real-world bigotry.

It’s ironic that these features are part of so-called Artificial Intelligence, because they demonstrate a profound lack of actual intelligence.

What should the AI do, and what should we do about the AI?

This egg is rotten and needs to be tossed

If you asked me, an intelligent human, to draw a successful person, I’d say that’s impossible because success isn’t a characteristic that defines the way a person looks. I can’t just draw success; I need to know more before I can create that image. Any attempt to create an image from just the word ‘success’ would be dumb.

But AI isn’t meant to be intelligent. It’s designed to be a reflection of us – to give us what we want. It’s designed to reinforce our stereotypes so that we will pat it on the head and say “good job, Pixel Studio!” while we share these tired images.

I asked Google if it had any concerns about the results I got from Pixel Studio. I asked if it’s a problem that Pixel Studio reinforces negative stereotypes. And if this problem cannot be solved, would Google consider once again removing the ability to make images with people? I asked these questions a couple of weeks ago and Google has not responded.

This isn’t a chicken-and-egg question. It doesn’t matter whether the image generator creates the stereotype or simply reflects it. This egg is rotten and needs to be tossed. All of the eggs this chicken lays will be rotten. Let the AI play Tic Tac Toe and leave people alone.